Golden Paths, Guardrails, and Why Every Platform Needs a Catalog

Platform Engineering Guardrails, Backstage, Crossplane vs Terraform, and a Kyverno PDB policy

Welcome back to Podo Stack. This week we zoom out. The last three issues covered individual tools — image pulling, autoscaling, eBPF networking. But tools don’t help if your engineers can’t find them, use them safely, or provision infrastructure without filing a ticket.

This week: the platform layer. The boring stuff that makes everything else work.

🏗️ The Pattern: Platform Engineering Guardrails

Here’s something I see a lot. A team builds a shiny Internal Developer Platform. Self-service. Kubernetes. The works. Then they write a 50-page “Platform Usage Guide” and email it to all engineers.

Nobody reads it. Someone deploys a public S3 bucket. Chaos.

Documentation is not a guardrail. A guardrail is code.

Gates vs Guardrails

Think of a highway. The old model is a tollbooth — you stop, show your papers, wait for approval. That’s a Change Advisory Board. It works, but it kills velocity.

Guardrails are the barriers on the sides of the road. You drive at full speed. If you try to go off the edge, something stops you. No human in the loop.

In practice, this means automated policies that either warn or block — but never require manual approval when rules are followed.

Three layers

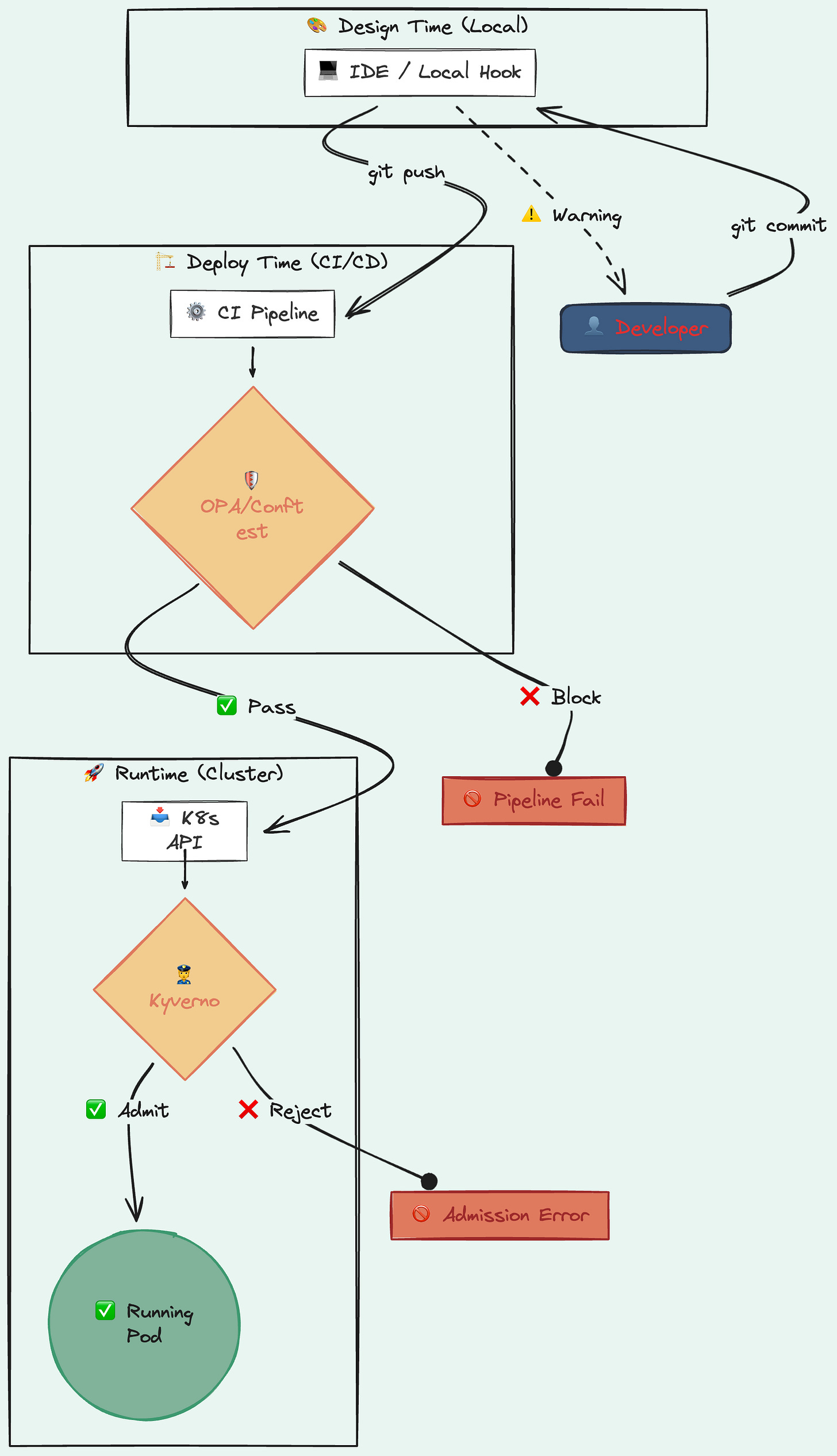

Good guardrails exist at every stage:

Design time — your IDE flags that you’re using a banned instance type. Fix it before it hits Git.

Deploy time — OPA or Conftest checks your manifests in CI. No memory limits? Pipeline fails with a clear message.

Runtime — Kyverno or Gatekeeper intercepts the API call. Pod running as root? Rejected.
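To make the runtime layer concrete, here is a minimal Kyverno sketch of "Pod running as root? Rejected." The policy and rule names are hypothetical. It only checks `securityContext.runAsNonRoot` on each entry in `containers`, and it ignores pod-level settings and init containers, which a production policy would also cover. By default Kyverno auto-generates equivalent rules for Deployments and other Pod controllers.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-run-as-non-root   # hypothetical name for this sketch
spec:
  # Block non-compliant requests at admission time.
  validationFailureAction: Enforce
  background: true
  rules:
    - name: containers-must-not-run-as-root
      match:
        any:
          - resources:
              kinds:
                - Pod
      validate:
        message: "Containers must set securityContext.runAsNonRoot: true."
        pattern:
          spec:
            # The pattern applies to every entry in the containers list.
            containers:
              - securityContext:
                  runAsNonRoot: true
```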

Remember the Kyverno policies from Issue #1 (disallow :latest) and Issue #2 (require labels)? Those are runtime guardrails. Today we add another one below.

Start soft

One mistake I’ve seen: teams go full enforcement on day one. Engineers feel like a robot is slapping their hands. Start with 80% of guardrails in `Audit` mode. Let people see the warnings, understand the rules. Then gradually flip to `Enforce`.
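For a sense of what "start soft" looks like, here is a sketch of a `:latest` guardrail in `Audit` mode. It follows the shape of Kyverno's public disallow-latest-tag sample, so the Issue #1 version may differ. In `Audit`, nothing is blocked and violations are recorded in PolicyReport resources (`kubectl get policyreport -A`). Flipping to `Enforce` later is a one-line change.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: disallow-latest-tag
spec:
  # Audit: record violations in PolicyReports, admit everything.
  # Change to Enforce once teams have cleaned up.
  validationFailureAction: Audit
  # Also report on resources that already exist in the cluster.
  background: true
  rules:
    # Untagged images implicitly mean :latest, so require an explicit tag.
    - name: require-image-tag
      match:
        any:
          - resources:
              kinds:
                - Pod
      validate:
        message: "An image tag is required."
        pattern:
          spec:
            containers:
              - image: "*:*"
    - name: validate-image-tag
      match:
        any:
          - resources:
              kinds:
                - Pod
      validate:
        message: "Using the mutable 'latest' tag is not allowed."
        pattern:
          spec:
            containers:
              - image: "!*:latest"
```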

🧰 The Unsexy Tool: Backstage Software Catalog

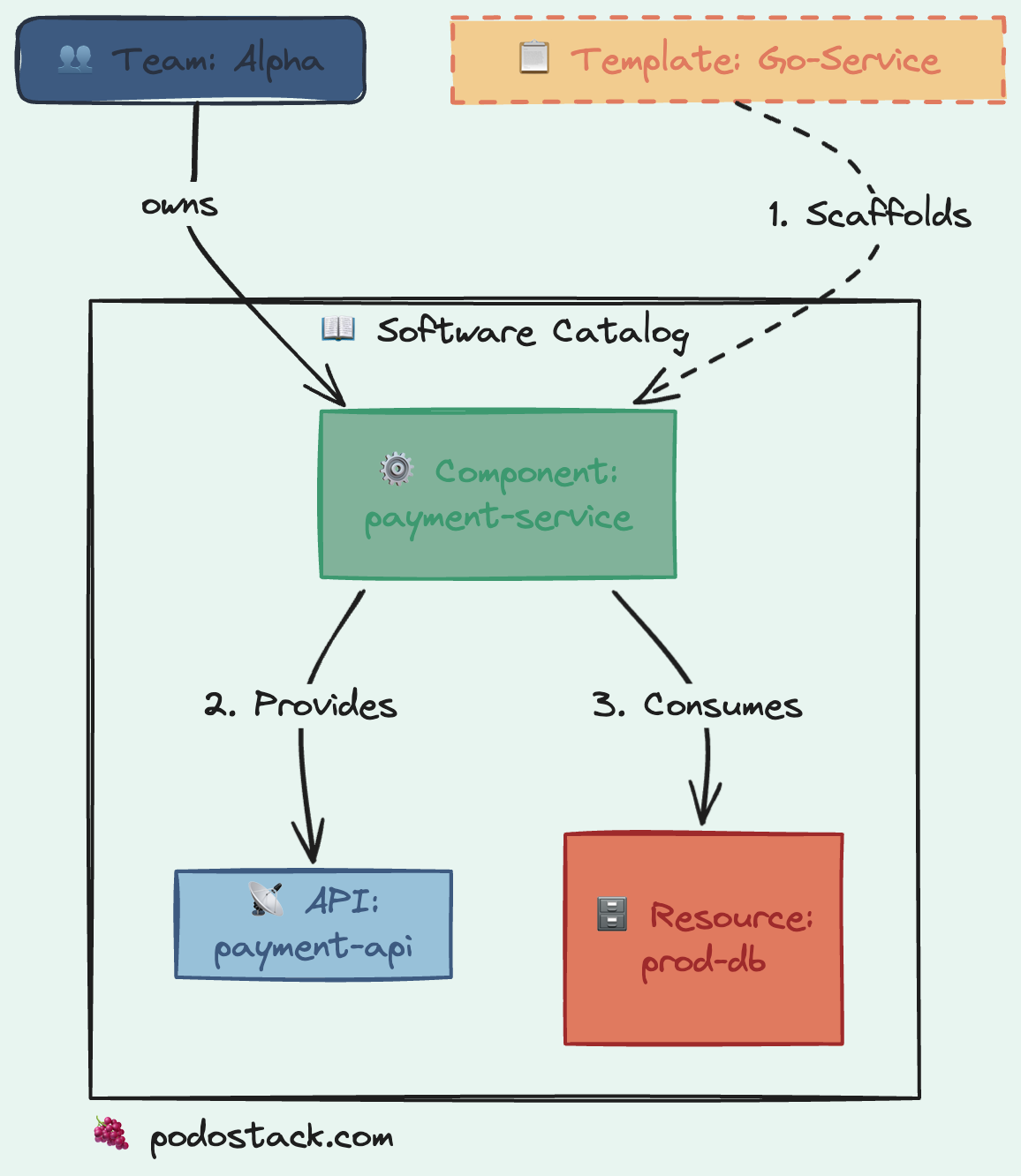

Nobody gets excited about a catalog. There’s no demo that makes the crowd gasp. But here’s what happens without one: engineers Slack each other “who owns the payment service?” and nobody knows where the API docs live.

Backstage is a CNCF Incubating project, originally from Spotify. It’s been around since 2020. Not new, not flashy. But it solves the “where is everything?” problem better than anything else I’ve seen.

Why it works

The trick is catalog-info.yaml, a file that lives next to your code. Developers own it. Once you configure a discovery provider for your Git host, Backstage finds these files across your repos automatically.

```yaml
apiVersion: backstage.io/v1alpha1
kind: Component
metadata:
  name: payment-service
  annotations:
    github.com/project-slug: acme/payment-service
spec:
  type: service
  owner: team-alpha
  lifecycle: production
  providesApis:
    - payments-api
```

That’s it. Now Backstage knows this service exists, who owns it, and which APIs it exposes. Add a `dependsOn` list under `spec` and it tracks dependencies too.
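The `providesApis` entry points at a separate API entity. A minimal sketch of one, assuming a hypothetical OpenAPI spec file named `openapi.yaml` next to the catalog file. It can live in the same catalog-info.yaml, separated from the Component by `---`.

```yaml
apiVersion: backstage.io/v1alpha1
kind: API
metadata:
  name: payments-api
spec:
  type: openapi
  lifecycle: production
  owner: team-alpha
  # $text inlines the file's contents; the path is relative to this catalog file.
  # openapi.yaml is a hypothetical file name for this sketch.
  definition:
    $text: ./openapi.yaml
```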

The real power: Golden Paths

Backstage’s Scaffolder lets you define templates. New microservice? Click a button, fill in a form, and get a repo with CI/CD, a Dockerfile, monitoring, and catalog-info.yaml, all pre-configured. Three minutes instead of three days.

The platform team controls the templates, not the developers. Want to enforce a new security standard? Update the template. Every new service gets it automatically.
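A trimmed sketch of such a template. `fetch:template`, `publish:github`, and `catalog:register` are built-in Scaffolder actions (`publish:github` needs the GitHub integration configured). The template name, the `./skeleton` directory, the `acme` org, and the `platform-team` owner are hypothetical placeholders.

```yaml
apiVersion: scaffolder.backstage.io/v1beta3
kind: Template
metadata:
  name: microservice-golden-path   # hypothetical
  title: New microservice
  description: Repo with CI/CD, Dockerfile, monitoring and catalog-info.yaml
spec:
  owner: platform-team             # hypothetical
  type: service
  parameters:
    - title: Service details
      required:
        - name
        - owner
      properties:
        name:
          title: Service name
          type: string
        owner:
          title: Owning team
          type: string
          ui:field: OwnerPicker
  steps:
    # Render the platform team's skeleton (Dockerfile, CI, catalog-info.yaml) with the form values.
    - id: fetch
      name: Fetch skeleton
      action: fetch:template
      input:
        url: ./skeleton
        values:
          name: ${{ parameters.name }}
          owner: ${{ parameters.owner }}
    # Create the GitHub repository and push the rendered files.
    - id: publish
      name: Publish to GitHub
      action: publish:github
      input:
        repoUrl: github.com?owner=acme&repo=${{ parameters.name }}
        description: ${{ parameters.name }} service
    # Register the new catalog-info.yaml so the service appears in the catalog.
    - id: register
      name: Register in catalog
      action: catalog:register
      input:
        repoContentsUrl: ${{ steps['publish'].output.repoContentsUrl }}
        catalogInfoPath: /catalog-info.yaml
  output:
    links:
      - title: Repository
        url: ${{ steps['publish'].output.remoteUrl }}
```

Because every new repo comes from `./skeleton`, changing a security default there changes it for every service created afterwards.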

⚔️ The Showdown: Crossplane vs Terraform

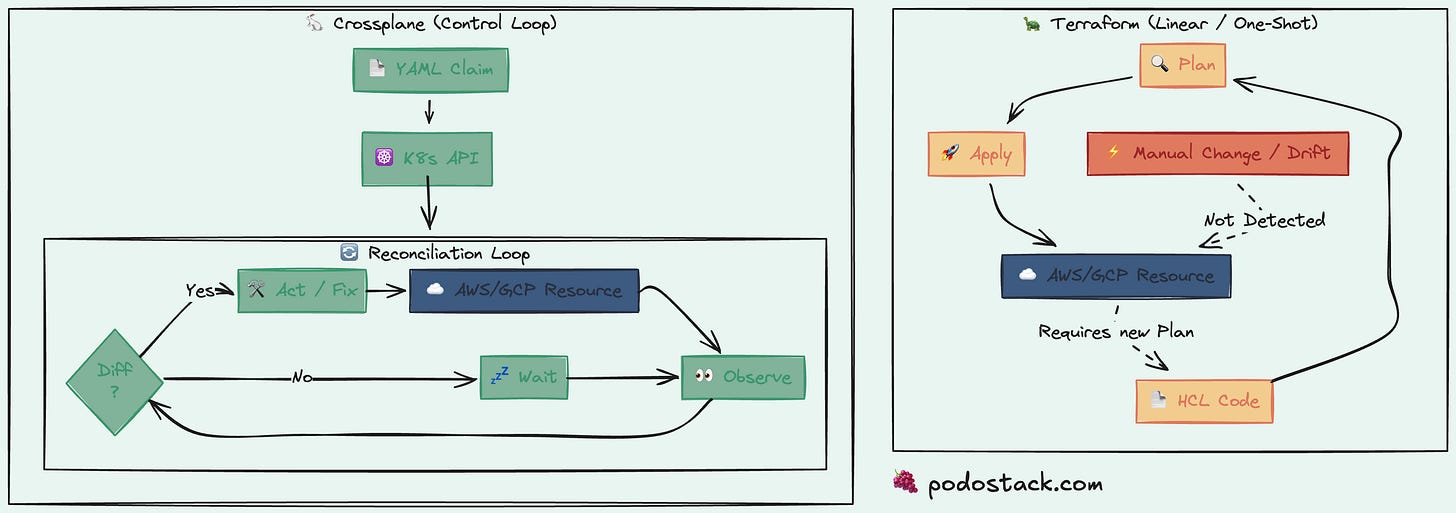

Both manage your cloud infrastructure. Completely different philosophies.

Terraform (the standard)

You write HCL files. You run `terraform plan`. You review the diff. You run `terraform apply`. Done.

It’s simple, well-understood, and has providers for everything. But it’s a one-shot operation. Between applies, nothing watches your infrastructure. Someone deletes a resource manually? Terraform doesn’t know until your next plan.

Crossplane (the K8s-native approach)

Crossplane runs inside your cluster. The platform team defines a custom resource type, say PostgreSQLCluster, and Crossplane’s controllers continuously reconcile every instance of it against reality, just like Kubernetes reconciles Deployments.

```yaml
apiVersion: platform.acme.com/v1alpha1
kind: PostgreSQLCluster
metadata:
  name: orders-db
spec:
  version: "15"
  storageGB: 100
  environment: production
```

The developer doesn’t know (or care) whether this creates an RDS instance, a Cloud SQL database, or something else. The platform team defines that mapping in a Composition.
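For a rough picture of the platform side, here is a sketch of the two objects behind that request: a CompositeResourceDefinition that declares the PostgreSQLCluster API, and a Composition that maps it to AWS RDS. It assumes Crossplane v1-style claims, the function-patch-and-transform Function, and the Upbound AWS provider. The `XPostgreSQLCluster` kind, the region, and the instance-class mapping are illustrative; credentials, networking, and connection secrets are left out.

```yaml
apiVersion: apiextensions.crossplane.io/v1
kind: CompositeResourceDefinition
metadata:
  name: xpostgresqlclusters.platform.acme.com
spec:
  group: platform.acme.com
  names:
    kind: XPostgreSQLCluster
    plural: xpostgresqlclusters
  # The namespaced kind developers create (the claim).
  claimNames:
    kind: PostgreSQLCluster
    plural: postgresqlclusters
  versions:
    - name: v1alpha1
      served: true
      referenceable: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                version:
                  type: string
                storageGB:
                  type: integer
                environment:
                  type: string
                  enum: [dev, staging, production]
              required: [version, storageGB, environment]
---
apiVersion: apiextensions.crossplane.io/v1
kind: Composition
metadata:
  name: postgresqlcluster-aws      # illustrative
spec:
  compositeTypeRef:
    apiVersion: platform.acme.com/v1alpha1
    kind: XPostgreSQLCluster
  mode: Pipeline
  pipeline:
    - step: patch-and-transform
      functionRef:
        name: function-patch-and-transform
      input:
        apiVersion: pt.fn.crossplane.io/v1beta1
        kind: Resources
        resources:
          - name: rds-instance
            base:
              apiVersion: rds.aws.upbound.io/v1beta1
              kind: Instance
              spec:
                forProvider:
                  region: us-east-1   # illustrative
                  engine: postgres
                  # Credentials and networking omitted in this sketch.
            patches:
              - type: FromCompositeFieldPath
                fromFieldPath: spec.version
                toFieldPath: spec.forProvider.engineVersion
              - type: FromCompositeFieldPath
                fromFieldPath: spec.storageGB
                toFieldPath: spec.forProvider.allocatedStorage
              # The developer says "production"; the platform team picks the hardware.
              - type: FromCompositeFieldPath
                fromFieldPath: spec.environment
                toFieldPath: spec.forProvider.instanceClass
                transforms:
                  - type: map
                    map:
                      production: db.r6g.large
                      staging: db.t3.medium
                      dev: db.t3.micro
```

A GCP variant would be a second Composition for the same `compositeTypeRef`, and the developer’s PostgreSQLCluster would not change.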

When to choose what

Terraform — you have a small team, simple infrastructure, or you’re early in your platform journey. It’s proven and everyone knows it.

Crossplane — you’re building a self-service platform. You want developers to request infrastructure through Kubernetes APIs without filing tickets. You need continuous reconciliation, not just plan-apply.

They’re less rivals than tools for different stages of platform maturity.

👮 The Policy: Require PodDisruptionBudget

Node drain. Three replicas. No PDB. All pods evicted at once. Service down.

This Kyverno policy prevents exactly that. If a Deployment or StatefulSet has more than one replica, a PodDisruptionBudget in the same namespace must select its pods. Otherwise, the API server rejects it.

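```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-pdb
spec:
  validationFailureAction: Enforce
  background: false
  rules:
    - name: check-for-pdb
      match:
        any:
          - resources:
              kinds:
                - Deployment
                - StatefulSet
      # Single-replica workloads are skipped.
      preconditions:
        all:
          - key: "{{ request.object.spec.replicas }}"
            operator: GreaterThan
            value: 1
      context:
        # Count PDBs in the namespace whose matchLabels are a subset of the pod template labels.
        - name: matchingPDBCount
          apiCall:
            urlPath: "/apis/policy/v1/namespaces/{{ request.namespace }}/poddisruptionbudgets"
            jmesPath: "items[?label_match(spec.selector.matchLabels, `{{ request.object.spec.template.metadata.labels }}`)] | length(@)"
      validate:
        message: >-
          {{ request.object.kind }} with {{ request.object.spec.replicas }} replicas
          requires a matching PodDisruptionBudget.
        # Reject when no PDB selects this workload's pods.
        deny:
          conditions:
            all:
              - key: "{{ matchingPDBCount }}"
                operator: LessThan
                value: 1
```

This is a guardrail in action, exactly what the first section describes. No documentation needed. The cluster enforces it. A PDB counts as matching when its `matchLabels` are a subset of the pod template labels (Kyverno’s `label_match` JMESPath function); PDBs that select only with `matchExpressions` are not recognized. Because the check runs at admission time, apply the PDB before the workload.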
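For reference, a PDB that would cover a three-replica workload whose pods carry the label `app: payment-service`. The name, namespace, and label are illustrative. With `minAvailable: 2` and three healthy replicas, the eviction API allows only one pod to be evicted at a time, so a node drain can no longer take the whole service down at once.

```yaml
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: payment-service      # illustrative
  namespace: payments        # illustrative; must match the workload's namespace
spec:
  # Keep at least 2 of the 3 replicas running during voluntary disruptions.
  minAvailable: 2
  selector:
    matchLabels:
      app: payment-service
```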

🛠️ The One-Liner: Check Kubernetes EOL

```shell
curl -s https://endoflife.date/api/kubernetes.json | jq '.[0]'
```

Platform teams have to track version support. endoflife.date aggregates EOL data for hundreds of products. This command shows the newest Kubernetes release cycle with its support dates.

Useful for audits, upgrade planning, or checking whether your cluster’s version still gets patches: swap `.[0]` for a filter on your cycle, such as `.[] | select(.cycle == "1.30")`.

Bookmark the API. It covers far more than Kubernetes: Node.js, PostgreSQL, Ubuntu, you name it.

Questions? Feedback? Reply to this email. I read every one.

🍇 Podo Stack — Ripe for Prod.